One day I woke up and Namics had deleted all our blogs, including more than 1,000 posts written by me (my first is dated April 8th 2004: “Optimerung für unseren Planeten”, roughly “Optimization for our planet”).

So here was my project: Ask IT-Services for the backup (thank you Patrick!) and migrate everything to stuker.com.

The following tips are my first steps toward a habilitation treatise on juggling WordPress imports ;)))) and they are intended for technical readers. If you are not a techie, go and read the result on stuker.com/blog (and tell me what I still have to fix).

Starting point

The systems had been migrated several times before, including from Movable Type to WordPress, so client code and URLs were very diverse and partly even broken. I also had no access to a running site, so I could not use an existing plugin or a crawler. What I had was a file system and two XML exports of the posts, created with WordPress 4.0.

The plan in short

- Extract links to all owned assets referenced in the two XMLs

- Rewrite all old domains to stuker.com

- Normalize path to /wp-content/uploads/

- Find all assets from step 1 and copy them into the folder structure known from step 3

- Upload all assets to stuker.com

- Import the two XMLs to stuker.com

- That’s not all folks

Extract links to all owned assets referenced in the two XMLs

I decided to use grep for that purpose and generated a consolidated text file with one fully qualified URL per line.

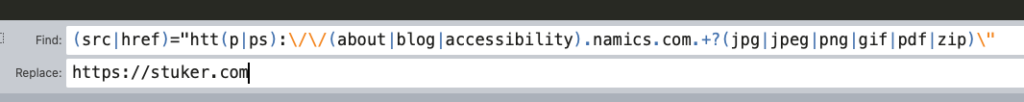

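```shell
grep -Ehoi '(src|href)="https?://(about|blog|accessibility)\.namics\.com[^"]*\.(jpg|jpeg|png|gif|pdf|zip)"' *.wordpress.xml
```

The relevant domains were about.namics.com, blog.namics.com and accessibility.namics.com (about.namics.com was completely lost), and I was hunting for src and href attributes only. In addition, I was looking only for the file extensions jpg, jpeg, png, gif, pdf and zip.

The option -E enables extended regular expressions (egrep syntax), -i ignores case (so .JPG matches as well as .jpg), -o outputs only the matching string instead of the whole line, and -h suppresses the file name that grep otherwise puts in front of each hit when it searches several files.

The `"` at the end limits the hits to syntactically correct attributes (in contrast to links in the text), and `[^"]*` keeps each hit from running past the closing quote into the next attribute. Extended regular expressions have no non-greedy quantifier (`.+?` is not lazy in grep -E), so excluding the quote character is the portable way to get the shortest hits. The output was my unexciting work list, looking like this: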
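For readers who would rather stay in Python, the same extraction can be sketched with the `re` module, which, unlike `grep -E`, does support a non-greedy `*?`. This is a minimal sketch assuming UTF-8 exports; `ASSET_PATTERN`, `extract_asset_links` and `extract_from_exports` are hypothetical names, and the file pattern `*.wordpress.xml` is taken from the grep call.

```python
import glob
import re

# src/href attributes pointing at one of the old Namics domains and ending in an
# asset extension; [^"]*? keeps each hit inside a single attribute value.
ASSET_PATTERN = re.compile(
    r'(?:src|href)="https?://(?:about|blog|accessibility)\.namics\.com'
    r'[^"]*?\.(?:jpg|jpeg|png|gif|pdf|zip)"',
    re.IGNORECASE,
)


def extract_asset_links(xml_text):
    """Return every matching attribute (e.g. 'src="http://..."') in document order."""
    # Only non-capturing groups, so findall returns the whole match strings.
    return ASSET_PATTERN.findall(xml_text)


def extract_from_exports(file_pattern="*.wordpress.xml"):
    """Collect the hits of all export files, one hit per list entry."""
    hits = []
    for path in sorted(glob.glob(file_pattern)):
        with open(path, encoding="utf-8") as export:
            hits.extend(extract_asset_links(export.read()))
    return hits


if __name__ == "__main__":
    for hit in extract_from_exports():
        print(hit)
```

Redirecting the printed hits into a file gives the same kind of work list as the grep call.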

```
src="http://blog.namics.com/2006/goog-lab-access-thumb.png"
src="http://accessibility.namics.com/uploads/2009/eafra-karte-taborder-thumb.png"
href="http://accessibility.namics.com/uploads/2009/eafra-karte-taborder.png"
src="https://blog.namics.com/import/i-f8cfd74a016575a3b5bcba472545c25e-000011-1.gif"
...
```

Rewrite all old domains to stuker.com

This was straightforward. I used search and replace in Sublime Text (with the same regex pattern for the domains) to change all URLs to https://stuker.com.
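The same replacement can also be scripted, which makes it repeatable when a fresh export comes in. A minimal sketch with Python's `re.sub`, assuming the three old domains from the grep step and https://stuker.com as the new base; `OLD_DOMAINS` and `rewrite_domains` are hypothetical names.

```python
import re

# Scheme plus one of the three old hosts; the path after the host is left untouched.
OLD_DOMAINS = re.compile(
    r"https?://(?:about|blog|accessibility)\.namics\.com", re.IGNORECASE
)


def rewrite_domains(text, new_base="https://stuker.com"):
    """Replace every old Namics blog origin in text with new_base."""
    return OLD_DOMAINS.sub(new_base, text)
```

Applied to the full text of the XML exports and to the hitlist, it changes every matching URL, not only asset links, which matches the goal of moving all URLs to https://stuker.com.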

Normalize path to /wp-content/uploads/

As you may have spotted, the asset paths came in different formats. Using Sublime Text again, I normalized them to /wp-content/uploads/ with multiple rules such as
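Rules of this kind can also be applied from a script. The sketch below is illustrative only: the three rules are hypothetical, inferred from the sample hitlist (year folders, /uploads/ and /import/ directly below the domain), and they assume the domains were already rewritten to stuker.com. The real rule set depends on which legacy layouts appear in the export; `RULES` and `normalize_path` are hypothetical names.

```python
import re

# Hypothetical (pattern, replacement) rules, applied in order to one asset URL.
# None of them matches a path that already starts with /wp-content/uploads/,
# so running the function twice does no harm.
RULES = [
    (re.compile(r"stuker\.com/uploads/"), "stuker.com/wp-content/uploads/"),
    (re.compile(r"stuker\.com/(\d{4})/"), r"stuker.com/wp-content/uploads/\1/"),
    (re.compile(r"stuker\.com/import/"), "stuker.com/wp-content/uploads/import/"),
]


def normalize_path(url):
    """Rewrite one asset URL so that its path starts with /wp-content/uploads/."""
    for pattern, replacement in RULES:
        url = pattern.sub(replacement, url)
    return url
```

Because the year rule also matches permalinks such as /2006/some-post/, a sketch like this is best limited to asset URLs (for example the hitlist lines) rather than run over the whole XML.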

Find all assets from step 1 and copy them into the folder structure known from step 3

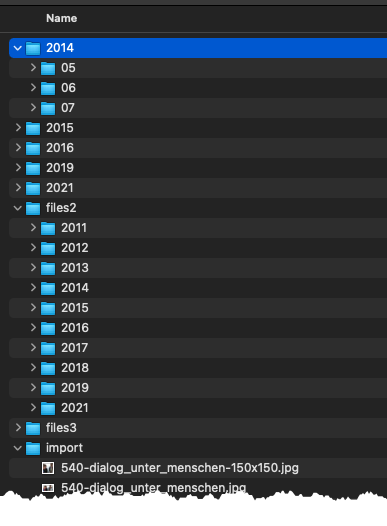

This step proved a little tricky, but since I wanted to learn Python, it was a nice exercise. So I switched languages and wrote a script that searched the file system for the first match of each file in my hitlist. (The assets were distributed over a myriad of folders because of WordPress Network, Movable Type and configuration changes over time.)

Step one was to split each line of the hitlist into the path and the asset name, and step two was a file search followed by a copy into the target file structure. Here are the two functions:

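```python
import os
import re
import shutil


def splitname(path):
    # Split a hitlist line like 'src="https://stuker.com/wp-content/uploads/2009/a.png"'
    # into ("/wp-content/uploads/2009/", "a.png"); None if the line does not match.
    match = re.search(r'^.+?stuker\.com(/.+/)([^/"]+)"', path, re.I)
    return match.groups() if match else None


def findfile(name, fspath):
    # Return the path of the first file with exactly this name below fspath, else None.
    for root, dirs, files in os.walk(fspath):
        if name in files:
            return os.path.join(root, name)
    return None
```

And a simple main loop:

```python
if __name__ == '__main__':
    filelist = "hitlist.txt"    # placeholder: the consolidated grep output
    source = "/path/to/backup"  # placeholder: the restored file system
    target = "/path/to/upload"  # placeholder: target root, no trailing slash

    with open(filelist, "r") as hits:
        for line in hits:
            parts = splitname(line)
            if parts is None:
                print("Skipped: ", line.strip())
                continue
            filepath, filename = parts
            srcfilepath = findfile(filename, source)
            if srcfilepath is not None:
                targetpath = target + filepath + filename
                # copyfile does not create folders, so build the target structure first
                os.makedirs(os.path.dirname(targetpath), exist_ok=True)
                shutil.copyfile(srcfilepath, targetpath)
            else:
                print("Not found: ", filename, filepath)
```

I struggled with the (implicit) data formats such as tuples and lists (re.findall, for example, returns a list of tuples when the pattern has several groups), but I had just started with Python ;). As output I got an error list that is the basis for a manual brush-up, which is still ahead of me. About 5% of the files were missing.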
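findfile walks the whole source tree once per hitlist line, which gets slow with thousands of assets spread over a myriad of folders. A possible alternative, sketched here under the assumption that file names are matched exactly as in findfile, is to walk the tree once and keep a dictionary from file name to the first path found; `build_index` is a hypothetical helper name.

```python
import os


def build_index(fspath):
    """Walk fspath once and map each file name to the first path where it occurs."""
    index = {}
    for root, dirs, files in os.walk(fspath):
        for name in files:
            # setdefault keeps the first hit, mirroring findfile's early return
            index.setdefault(name, os.path.join(root, name))
    return index
```

Called once as `index = build_index(source)` before the loop, `index.get(filename)` can replace `findfile(filename, source)` and, like findfile, returns None for files that are not in the backup.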

Upload all assets to stuker.com

Thank you Cyberduck.

Import the two XMLs to stuker.com

This was a breeze using the integrated importer in WordPress, which also attributed all the posts to my existing user.

That’s not all folks

As I said, about 5% of the files are missing, and because the internet ages badly (people should stick to Tim's advice), there is still some manual brush-up to do. And since I decided not to import the assets into the WordPress media library (using WP-CLI), I have to upload them again and again using Cyberduck.

Thank you, Noël, for sanity-checking my plan, and thanks for all the feedback. And watch out for Flashback Friday notes mentioning old posts ;).